
Who said practising GitOps always requires complex tooling and expensive infrastructure, even for simple scenarios? In this article, we will demonstrate how to set up (and run) a GitOps environment with k3d and Argo CD for local development.

Operating an application the GitOps way on a local computer can be useful in multiple scenarios:

What if we could have an inexpensive and hassle-free way to get a complete Kubernetes environment close enough to staging and production?

—

k3d as described on its website:

k3d is a lightweight wrapper to run k3s (Rancher Lab’s minimal Kubernetes distribution) in docker. k3d makes it very easy to create single- and multi-node k3s clusters in docker, e.g. for local development on Kubernetes.

It is a cost-effective solution for developers to provision lightweight Kubernetes clusters locally. Finally, with ArgoCD syncing the cluster resources with our Git-hosted manifests, we get a first-class, cost-free, GitOps-oriented workflow without any big compromise!

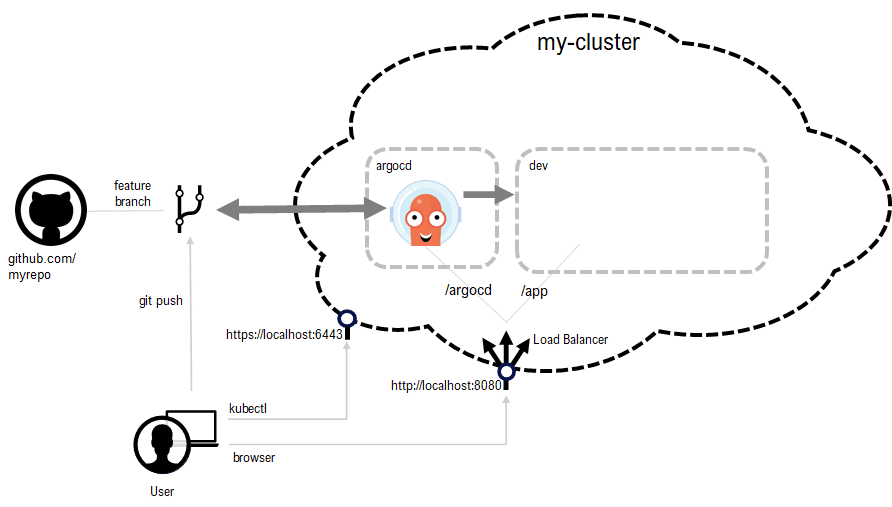

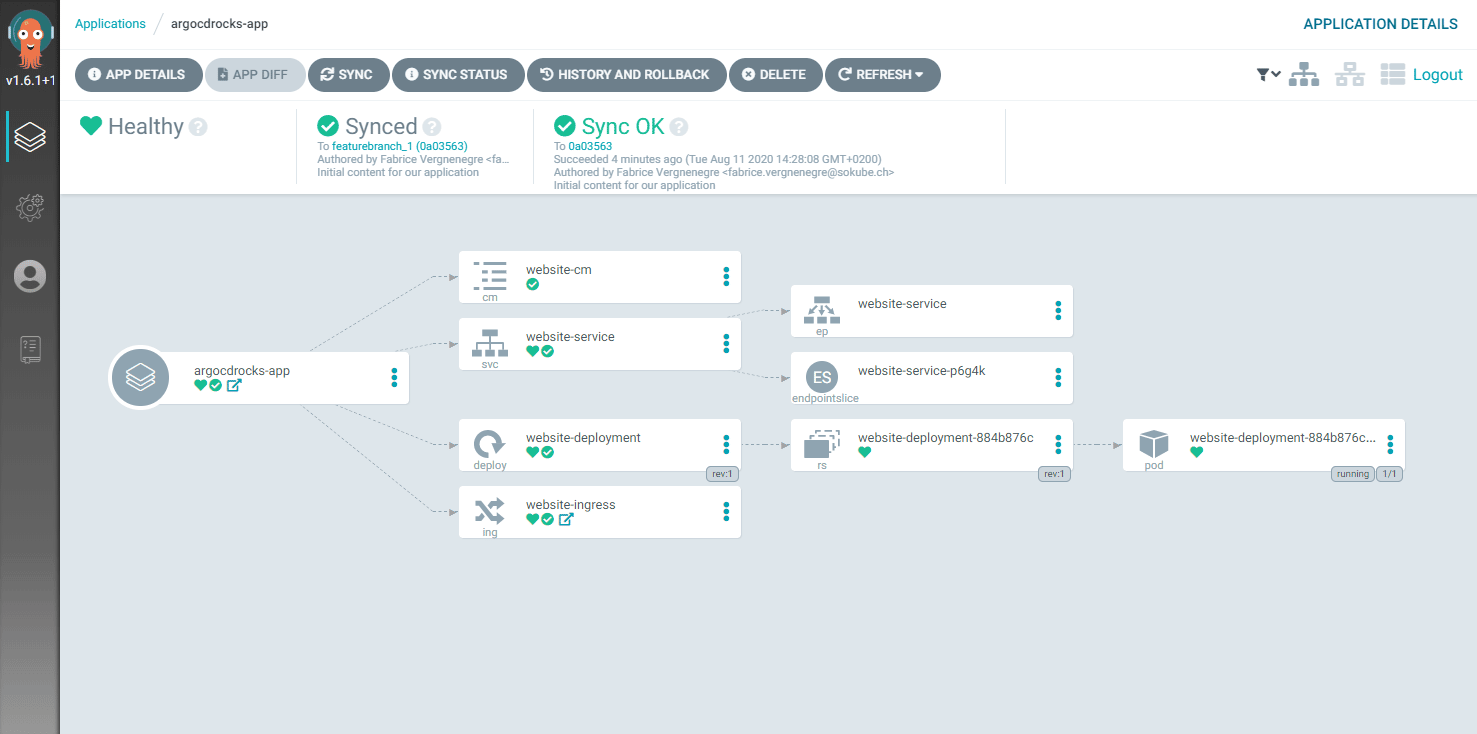

This is a graphical overview of the setup we’re going to build in this article. We will limit our scope to feature branches: ideally, your other branches (staging, release branches, master) are already covered by CI/CD pipelines targeting dedicated clusters. We follow the pull approach: changes are detected and applied from within the cluster, so the cluster credentials are never exposed outside of it.

Our local cluster will have two namespaces: argocd, which hosts the ArgoCD components, and dev, where our application is deployed.

ArgoCD will keep the dedicated local dev namespace in sync with the Kubernetes manifests of our application hosted on a GitHub repository branch.

—

We’ve already covered k3d and k3s in a previous post. In the meantime, k3d has been significantly rewritten and keeps getting better, but some of its commands aren’t compatible with previous versions. For this article, we’re going to use the freshly released v3.0.0, following the installation instructions. Our local environment is Ubuntu 20.04.

```bash
$ sudo wget https://github.com/rancher/k3d/releases/download/v3.0.0/k3d-linux-amd64 -O /usr/local/bin/k3d
$ sudo chmod +x /usr/local/bin/k3d
```

We check that the setup is correct by running the `k3d version` command:

```bash
$ k3d version
k3d version v3.0.0
k3s version v1.18.6-k3s1 (default)
```

We can install the auto-completion scripts (which are really helpful) using the `k3d completion` command:

```bash
$ echo "source <(k3d completion bash)" >> ~/.bashrc
$ source ~/.bashrc
```

Let’s set up our cluster with 2 worker nodes (`--agents` in the k3d command line) and expose the HTTP load balancer on port 8080 of the host, so that we can interact with our application:

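```bash
$ k3d cluster create my-cluster --api-port 6443 -p 8080:80@loadbalancer --agents 2
```

By default, creating a new cluster updates the default kubeconfig with the new cluster’s context and switches the current context to it.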
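Since the k3d commands above only work in this form from v3 onwards, a script automating this setup might check the installed version before creating the cluster. A minimal sketch, assuming the first line of `k3d version` looks like the output shown above; `k3d_major_version` is a hypothetical helper name, not part of k3d:

```bash
# Hypothetical helper: reads `k3d version` output on stdin and prints the
# major version of k3d (e.g. "3" for the line "k3d version v3.0.0").
k3d_major_version() {
  awk '/^k3d version v/ {
    sub(/^v/, "", $3)          # "v3.0.0" -> "3.0.0"
    split($3, parts, ".")      # parts[1] = "3"
    print parts[1]
    exit
  }'
}
```

In a setup script, a guard such as `[ "$(k3d version | k3d_major_version)" = "3" ] || { echo "k3d v3 is required" >&2; exit 1; }` would then stop before running the v3-specific `k3d cluster create` command.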

With the current context pointing to the new cluster, we can use the kubectl command directly:

```bash
$ kubectl cluster-info
```

—

Argo CD is a declarative, continuous delivery tool for Kubernetes based on the GitOps approach.

Application definitions, configurations, and environments should be declarative and version controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Download the ArgoCD 1.6.2 installation manifest:

```bash
$ wget https://github.com/argoproj/argo-cd/raw/v1.6.2/manifests/install.yaml
```

We want ArgoCD to be available under /argocd, so we need to change the application root path with the `--rootpath` option in the argocd-server container command (right after the `--staticassets /shared/app` lines). We also add the `--insecure` option, so that argocd-server serves plain HTTP behind our Ingress instead of handling TLS itself:

```yaml
...
    spec:
      containers:
      - command:
        - argocd-server
        - --staticassets
        - /shared/app
        # Add insecure and argocd as rootpath
        - --insecure
        - --rootpath
        - /argocd
        image: argoproj/argocd:v1.6.2
        imagePullPolicy: Always
...
```

Once done, create the argocd namespace and install ArgoCD with the modified manifest:

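```bash
$ kubectl create namespace argocd
$ kubectl apply -f install.yaml -n argocd
```

Create an Ingress that routes /argocd to the argocd-server service. The `extensions/v1beta1` Ingress API used here is still served by the Kubernetes 1.18 bundled with this k3s release, but it was removed in Kubernetes 1.22: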

```bash
$ cat > ingress.yaml << EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: argocd-ingress
  labels:
    app: argocd
  annotations:
    ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - http:
      paths:
      - path: /argocd
        backend:
          serviceName: argocd-server
          servicePort: 80
EOF
```

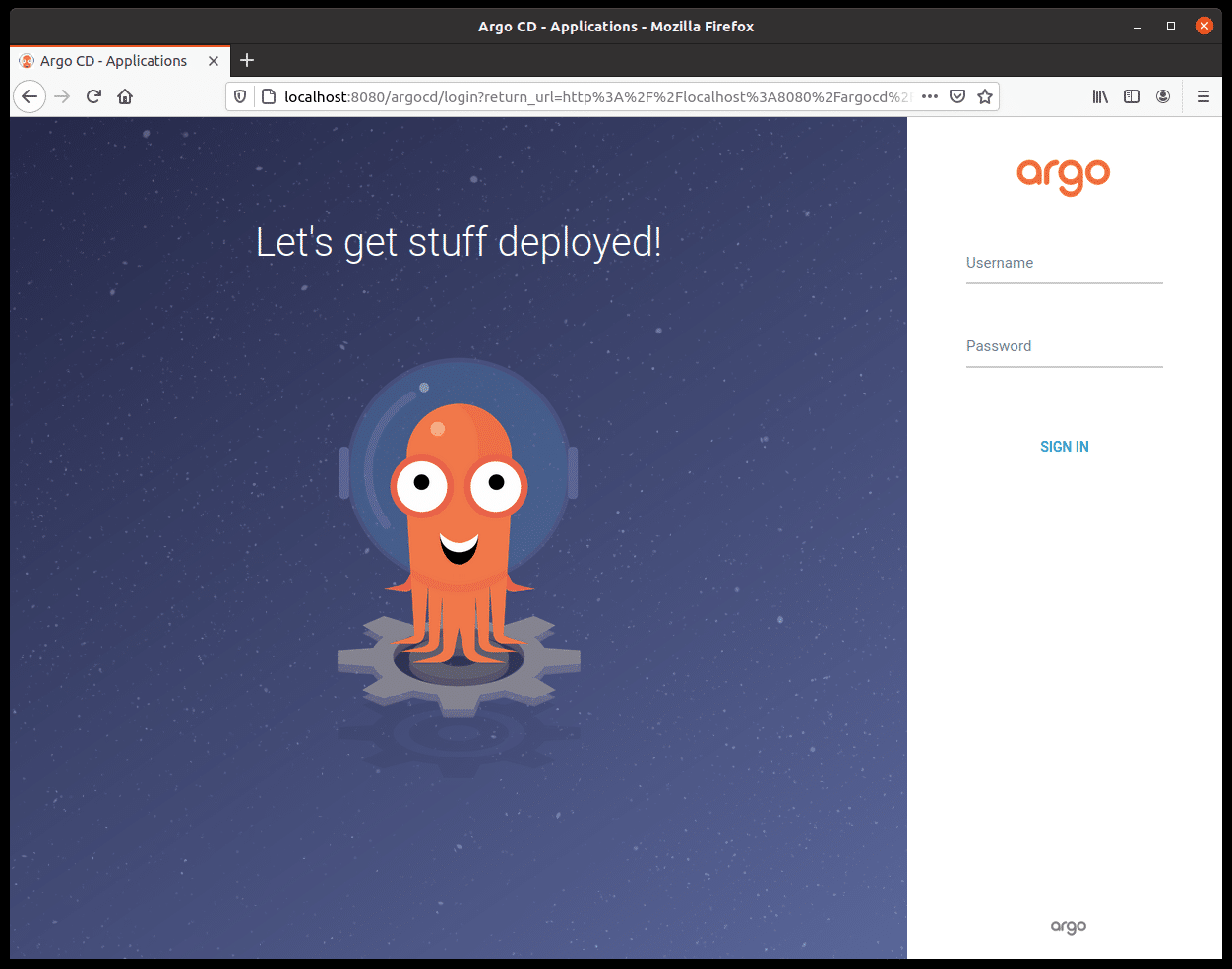

```bash
$ kubectl apply -f ingress.yaml -n argocd
```

Open a browser at http://localhost:8080/argocd. Please note that the installation might take some time to complete.
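Since the installation takes a little while, a script could poll the UI before moving on instead of refreshing the browser. A minimal sketch, assuming curl is installed; `wait_for_http` is a hypothetical helper name, not part of k3d or Argo CD:

```bash
# Hypothetical helper: polls URL until curl gets a non-error HTTP response
# (status < 400), trying at most ATTEMPTS times, DELAY seconds apart.
# Returns 0 as soon as the URL answers, 1 if it never did.
wait_for_http() {
  local url=$1 attempts=${2:-30} delay=${3:-5} i
  for ((i = 1; i <= attempts; i++)); do
    # -f: treat HTTP errors as failures, -s: silent, -o /dev/null: drop the body
    if curl -fs --max-time 5 -o /dev/null "$url"; then
      return 0
    fi
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `wait_for_http http://localhost:8080/argocd 60 5 && echo "Argo CD UI is up"`. Alternatively, `kubectl -n argocd rollout status deployment/argocd-server` waits for the Deployment rollout itself, but not for the Ingress route.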

By default, ArgoCD uses the server pod name as the password for the admin user, so we’re going to replace it with mysupersecretpassword (we used https://bcrypt-generator.com/ to generate the bcrypt hash of "mysupersecretpassword" shown below):

kubectl -n argocd patch secret argocd-secret

-p '{"stringData": {

"admin.password": "$2y$12$Kg4H0rLL/RVrWUVhj6ykeO3Ei/YqbGaqp.jAtzzUSJdYWT6LUh/n6",

"admin.passwordMtime": "'$(date +%FT%T%Z)'"

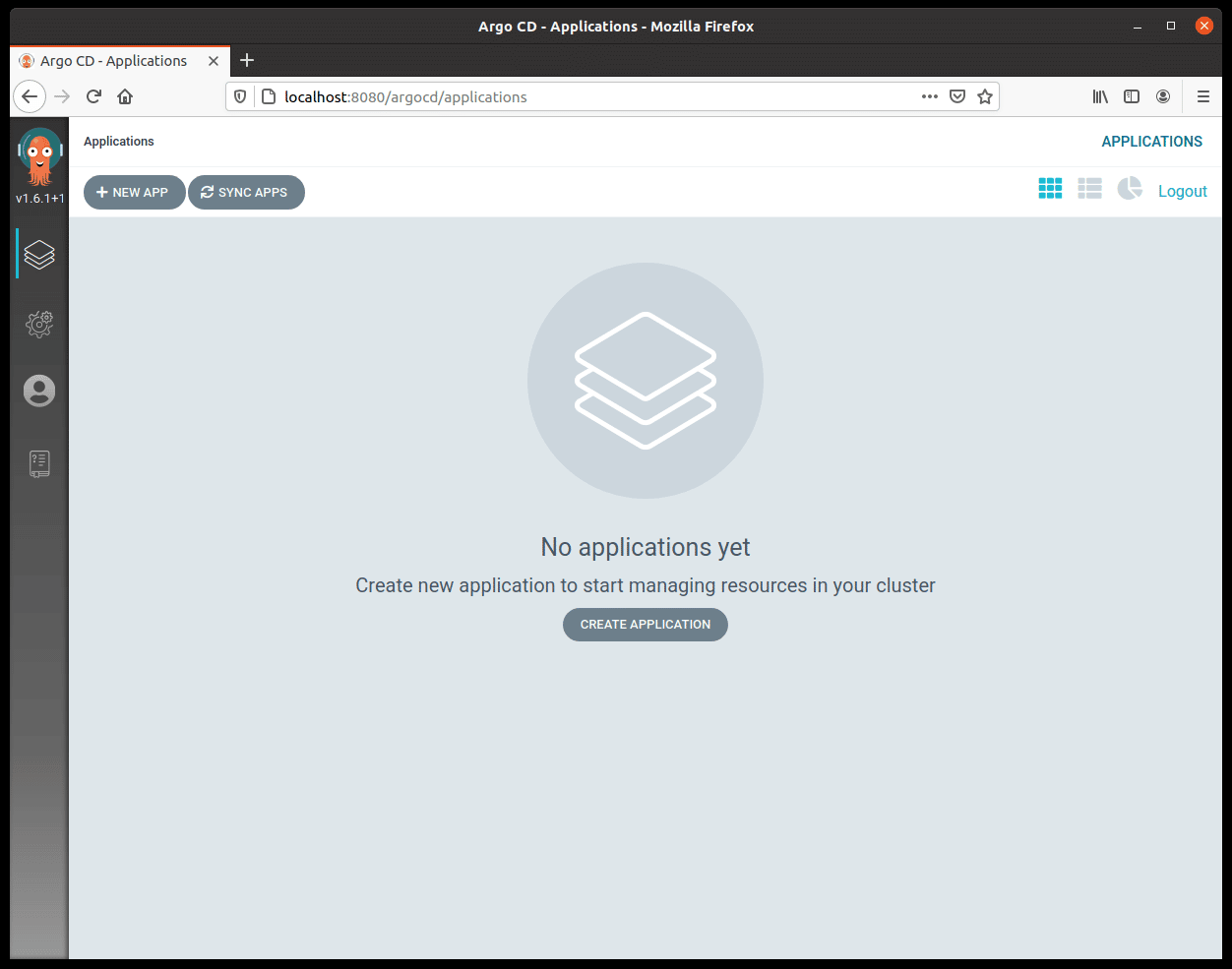

}}'Now use the credentials admin and mysupersecretpassword as password and we should get an empty and ready-to-use ArgoCD instance!
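Instead of relying on a third-party website, the bcrypt hash can also be generated locally, for example with `htpasswd` from the apache2-utils package, and the patch payload built by a small function so that the `$` characters of the hash never go through shell expansion. A sketch, where `argocd_admin_password_patch` is a hypothetical helper name:

```bash
# Hypothetical helper: prints the JSON patch for the argocd-secret Secret,
# given a bcrypt hash as first argument. printf passes the hash through
# as-is, and bcrypt hashes contain no characters that need JSON escaping.
argocd_admin_password_patch() {
  local hash=$1
  printf '{"stringData": {"admin.password": "%s", "admin.passwordMtime": "%s"}}\n' \
    "$hash" "$(date +%FT%T%Z)"
}

# Usage (assumes htpasswd from apache2-utils is installed):
#   hash=$(htpasswd -nbBC 10 "" mysupersecretpassword | tr -d ':\n')
#   kubectl -n argocd patch secret argocd-secret \
#     -p "$(argocd_admin_password_patch "$hash")"
```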

You might want to explore the UI a bit, but as we want to automate most of our setup, it’s better not to configure anything manually.
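In the same spirit, the manual edit of install.yaml described earlier can be scripted. A minimal sketch, assuming GNU sed (the default on Ubuntu, needed for `\n` in the replacement) and that the `- /shared/app` item appears exactly once, as in the v1.6.2 manifest; `patch_argocd_install` is a hypothetical helper name:

```bash
# Hypothetical helper: reads an Argo CD install manifest on stdin and writes it
# to stdout with --insecure and --rootpath /argocd added to the argocd-server
# command, right after the "- /shared/app" item and with the same indentation.
# Requires GNU sed; not idempotent, so apply it to a freshly downloaded file.
patch_argocd_install() {
  sed -E 's#^([[:space:]]*)- /shared/app$#&\n\1- --insecure\n\1- --rootpath\n\1- /argocd#'
}
```

For example: `wget -qO- https://github.com/argoproj/argo-cd/raw/v1.6.2/manifests/install.yaml | patch_argocd_install > install.yaml`, followed by the same `kubectl apply` as before.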

—

We will use a public GitHub repository as an example here, but ArgoCD doesn’t depend on where the application manifests are hosted, as long as they live in a Git repository it can reach.

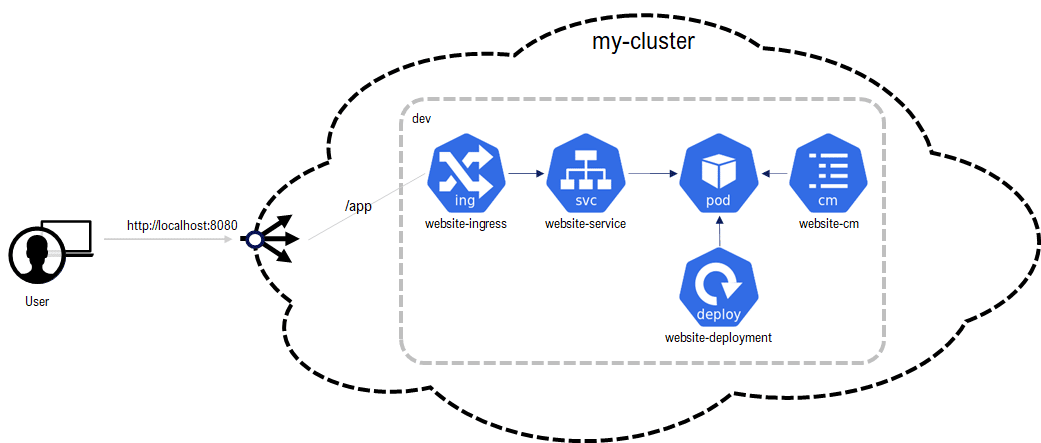

Our sample application is a Deployment of an nginx web server serving some content (a ConfigMap storing the website, mounted as a volume), plus a Service and an Ingress to make it accessible on /app.

The sample application is hosted publicly on GitHub: https://github.com/sokube/argocd-rocks

As ArgoCD syncs the content of a namespace with the manifests under a path inside a Git repository, we first need to create a dedicated namespace (dev):

```bash
$ kubectl create namespace dev
```

We want our work inside ArgoCD to live in a dedicated project named argocdrocks-project. We deliberately put some restrictions on this project, which apply to all applications inside it: only in-cluster deployments into the dev namespace are allowed, and only from the Sokube GitHub repositories.

```bash
$ cat > project.yaml << EOF
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: argocdrocks-project
  labels:
    app: argocdrocks
spec:
  # Project description
  description: Our ArgoCD Project to deploy our app locally
  # Allow manifests to be deployed only from Sokube Git repositories
  sourceRepos:
  - "https://github.com/sokube/*"
  # Only allow deployments into the dev namespace of the same cluster
  destinations:
  - namespace: dev
    server: https://kubernetes.default.svc
  # Enables namespace orphaned resource monitoring.
  orphanedResources:
    warn: false
EOF
```

```bash
$ kubectl apply -f project.yaml -n argocd
```

Next, we create an ArgoCD Application that synchronizes the Kubernetes manifests hosted in the app folder of the featurebranch_1 branch of our GitHub repository with the corresponding resources inside the dev namespace of our local cluster:

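```bash
$ cat > application.yaml << EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  labels:
    app: argocdrocks
  name: argocdrocks-app
spec:
  project: argocdrocks-project
  source:
    repoURL: https://github.com/sokube/argocd-rocks.git
    targetRevision: featurebranch_1
    path: app
    directory:
      recurse: true
  destination:
    server: https://kubernetes.default.svc
    namespace: dev
  syncPolicy:
    automated:
      prune: false
      selfHeal: true
EOF
```

Let’s pause for a moment on the syncPolicy section of the ArgoCD Application definition. With automated, ArgoCD synchronizes the application on its own as soon as it detects a difference between the Git branch and the cluster. With prune set to false, resources deleted from Git are not automatically deleted from the cluster. With selfHeal set to true, manual changes made directly in the cluster (for instance with kubectl edit) are reverted to the state described in Git.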

```bash
$ kubectl apply -f application.yaml -n argocd
```

The synchronization is immediate, and our resources get created inside the dev namespace:

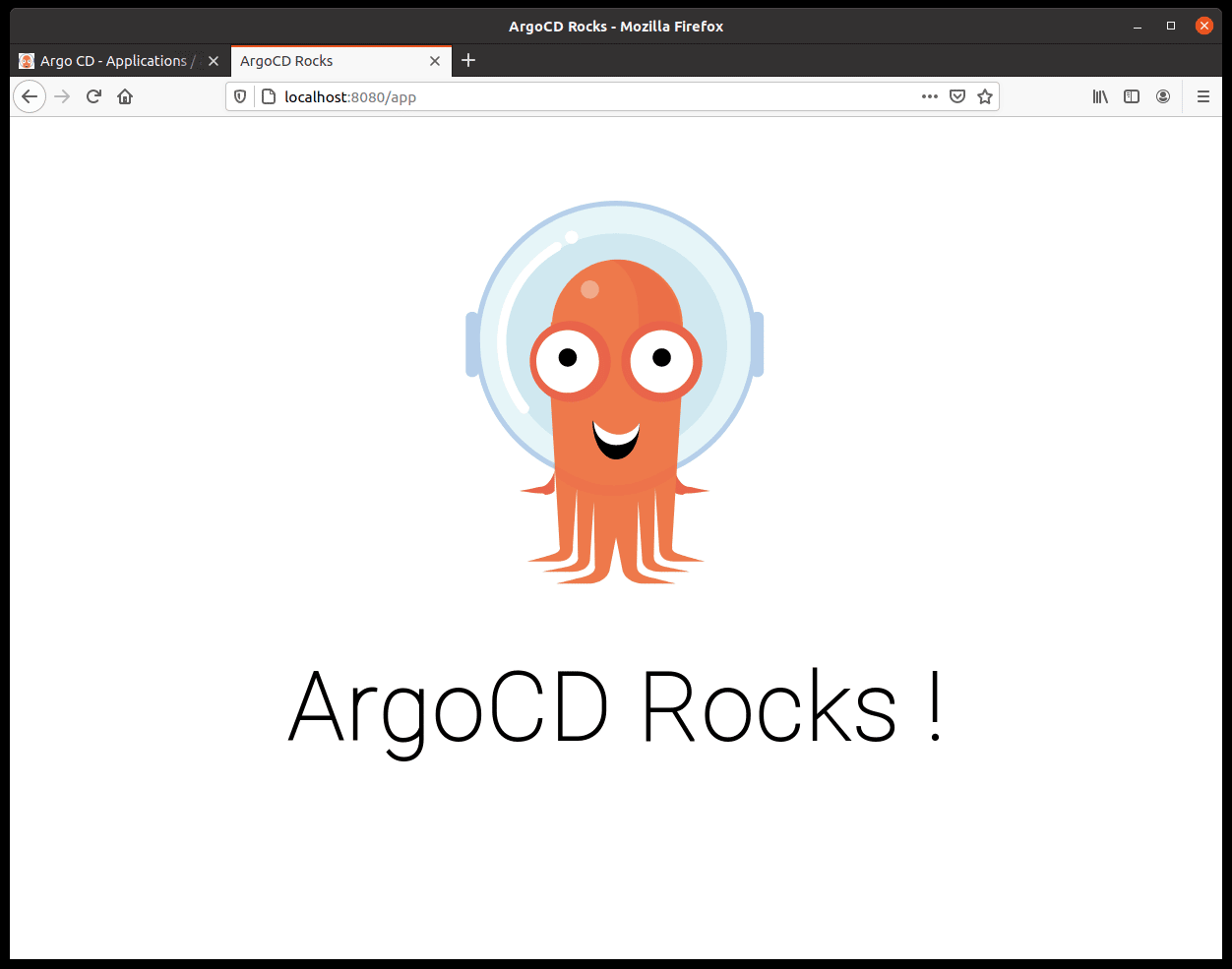

Our application is now served under /app at http://localhost:8080:

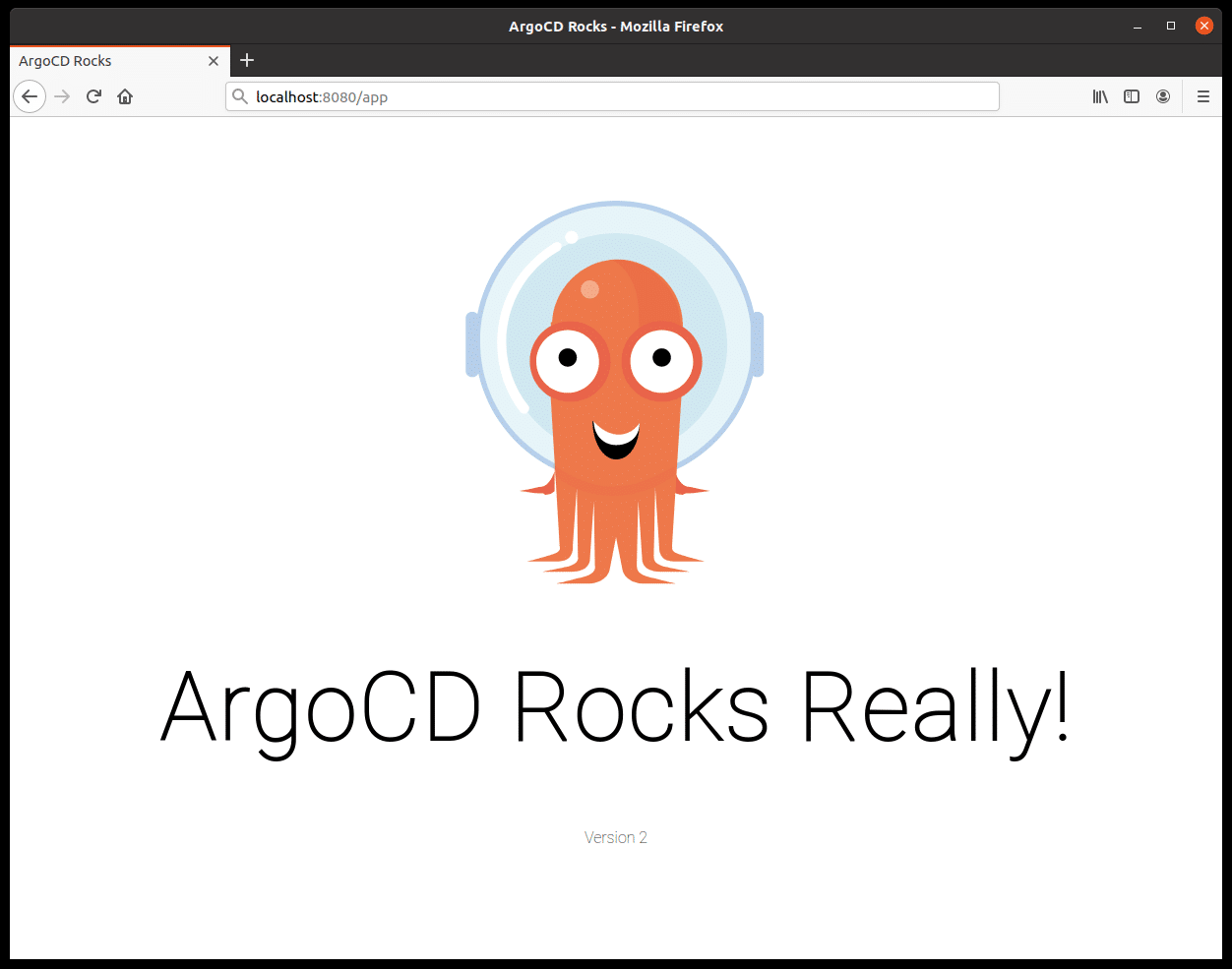
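Since our scope is feature branches, switching the local environment to another branch only requires a different targetRevision. A sketch that renders the Application above for a given branch; `render_argocd_app` is a hypothetical helper name, and `featurebranch_2` in the usage line below is a placeholder branch name:

```bash
# Hypothetical helper: prints the argocdrocks-app Application manifest for the
# branch given as first argument (defaults to featurebranch_1).
render_argocd_app() {
  local branch=${1:-featurebranch_1}
  cat << EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  labels:
    app: argocdrocks
  name: argocdrocks-app
spec:
  project: argocdrocks-project
  source:
    repoURL: https://github.com/sokube/argocd-rocks.git
    targetRevision: ${branch}
    path: app
    directory:
      recurse: true
  destination:
    server: https://kubernetes.default.svc
    namespace: dev
  syncPolicy:
    automated:
      prune: false
      selfHeal: true
EOF
}
```

Running `render_argocd_app featurebranch_2 | kubectl apply -n argocd -f -` keeps the name argocdrocks-app, so it re-points the existing Application at the new branch rather than creating a second one. With prune set to false, resources that exist only in the previous branch are left in the dev namespace.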

Any subsequent change pushed to our feature branch will be picked up by ArgoCD (which polls the Git repository, every three minutes by default) and applied inside our cluster. Let’s modify website-cm.yaml in our featurebranch_1 branch:

```yaml
...
<h1>ArgoCD Rocks Really!</h1>
<p>Version 2</p>
...
```

We commit, push, and watch ArgoCD pick up the changes and apply them. Here, updating the ConfigMap doesn’t trigger a redeployment, but since the ConfigMap is mounted as a volume, the kubelet picks up the change after a minute or so.
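To check the change from a script rather than a browser, one could poll the page until the new content shows up. A minimal sketch, assuming curl and grep are available; `wait_for_content` is a hypothetical helper name:

```bash
# Hypothetical helper: polls URL until the response body contains TEXT
# (fixed-string match), trying at most ATTEMPTS times, DELAY seconds apart.
# Returns 0 once the text is found, 1 otherwise.
wait_for_content() {
  local url=$1 text=$2 attempts=${3:-30} delay=${4:-10} i
  for ((i = 1; i <= attempts; i++)); do
    # If curl fails, grep reads empty input and the test fails too
    if curl -fs --max-time 5 "$url" | grep -qF -- "$text"; then
      return 0
    fi
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `wait_for_content http://localhost:8080/app "Version 2" 30 10 && echo "Version 2 is live"` covers both the ArgoCD polling interval and the kubelet refresh delay.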

Through this quick article, we’ve demonstrated and experimented with ArgoCD, using Git as the source of truth for application deployment. Combined with k3d, Rancher Labs’ lightweight wrapper around k3s, both can easily run on a laptop for experimentation purposes.

In a real-life scenario, ArgoCD would be part of a secured enterprise CI/CD pipeline to deploy workloads on production Kubernetes clusters.

GitOps operators such as ArgoCD or FluxCD definitely help implement "Operations by Pull Request" scenarios. Both projects, respectively incubating and sandbox CNCF projects at the time of writing, have indeed decided to join forces to bring a unified, perhaps soon standardized, GitOps experience to the Kubernetes community…