By Yann Albou.

This blog post was originally published on Medium.

Docker, containerd and CRI-O are all about containers, but what are the differences between them, what are their pros and cons, and what is the purpose of having several « docker implementations »? Are they all Kubernetes compatible?

The multiplicity of container engines and tools around containers (crictl, podman, buildah, skopeo, kaniko, jib, …) shows the need for evolution and adaptability in the Kubernetes world.

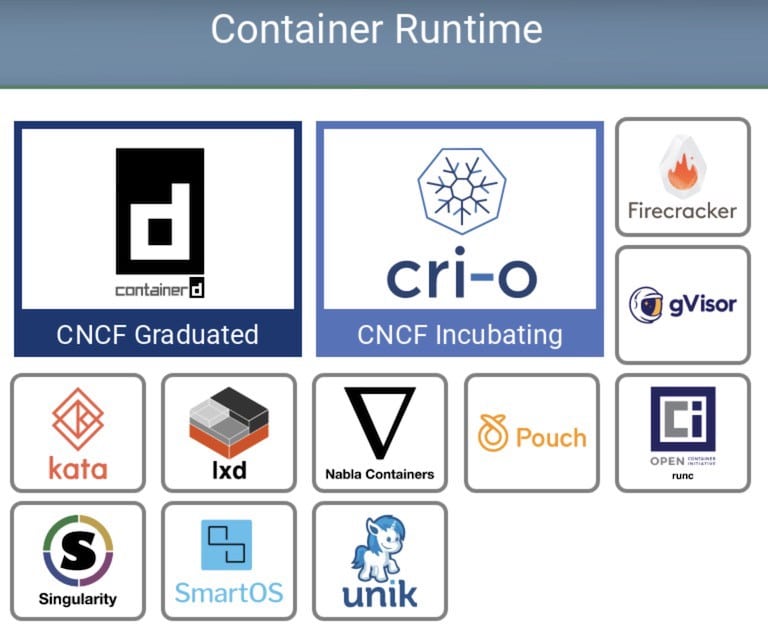

The Cloud Native Computing Foundation (CNCF), which hosts the Kubernetes project, has created a landscape map with a container runtime section: https://landscape.cncf.io/images/landscape.png

It is not an exhaustive list, but it shows the growth in the number of container runtimes for Kubernetes.

Docker has been the de facto standard for a long time. Originally aimed at extending the capabilities of Linux Containers (LXC), Docker was created as an open-source project in 2013, and the company’s solution is now the leading software containerisation platform on the market. Initially built on LXC, Docker acts as a portable container engine for packaging applications and their dependencies into containers that are easily deployable on any system.

Before Docker 1.11 (April 2016), the Docker engine daemon was in charge of downloading container images, launching container processes, exposing an API to interact with the daemon, … all within a single central process with root privileges.

This approach is convenient for deployment, but a monolithic container runtime that manages both images and containers doesn’t follow best practices regarding process separation and Unix privileges.

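Established in June 2015 by Docker and other leaders in the container industry (and donated to the Linux Foundation), the Open Container Initiative (OCI) currently contains two specifications: the Runtime Specification (runtime-spec) and the Image Specification (image-spec).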

But runc, the reference implementation of the OCI runtime specification, is a low-level container runtime without an API (like gVisor, Kata Containers, or Nabla Containers).

Developers and system administrators need a high-level container runtime that provides an API and a CLI for interacting easily with images: this is Docker.

To make the Docker architecture more modular, and more neutral towards the other industry actors, Docker introduced containerd, which acts as an API facade to container runtimes (runc in this case).
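To make "low level" a bit more concrete: a runtime such as runc does not pull images or expose a daemon API. It is pointed at a directory (a "bundle") holding a root filesystem and a `config.json` written according to the OCI runtime specification. The following is a minimal sketch of what such a configuration can look like, built as a Python dict. The helper name `minimal_oci_config`, the hostname and the command are illustrative assumptions, and a real configuration carries many more fields (mounts, capabilities, and so on).

```python
import json


def minimal_oci_config(args, hostname="demo"):
    """Build a pared-down OCI runtime-spec style config as a plain dict.

    Hypothetical helper for illustration only; a real config.json
    carries many more fields.
    """
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),          # command executed inside the container
            "env": ["PATH=/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Path of the root filesystem, relative to the bundle directory.
        "root": {"path": "rootfs", "readonly": True},
        "hostname": hostname,
        "linux": {
            # Each namespace listed here is created fresh for the container.
            "namespaces": [
                {"type": t} for t in ("pid", "network", "ipc", "uts", "mount")
            ],
        },
    }


config = minimal_oci_config(["sh", "-c", "echo hello"])
config_json = json.dumps(config, indent=2)
print(config_json)
```

On a machine with runc installed, `runc spec` generates a complete default `config.json` in the current directory, which is a better starting point than a hand-written file like this one.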

Containerd has a smaller scope than Docker (for instance, it cannot build images), provides a client API, and is more focused on being embeddable.

To run a container, the Docker engine creates the image and passes it to containerd. Containerd then calls « containerd-shim », which uses runc to run the container.

The shim allows for daemon-less containers. It basically sits as the parent of the container’s process to facilitate a few things (like avoiding long-running runtime processes for containers).

So since Docker 1.11, Docker has been built upon runc and containerd, which made it the first OCI-compliant runtime release.

But it was not enough for Kubernetes.

Each container runtime has its own strengths, and it was mandatory for Kubernetes to support more runtimes.

This is why Kubernetes 1.5 introduced the Container Runtime Interface (CRI), which enables the kubelet (the Kubernetes component installed on each worker node and in charge of the container lifecycle) to use a wide variety of container runtimes without the need to recompile.

Supporting interchangeable container runtimes was not a new concept in Kubernetes, but in the beginning both Docker and rkt were integrated directly and deeply into the kubelet source code through an internal and volatile interface.

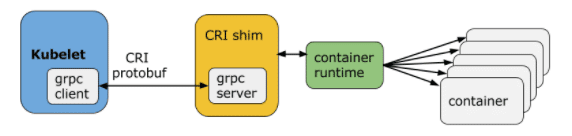

Kubelet communicates with the container runtime (or a CRI shim for the runtime) over Unix sockets using the gRPC framework, where kubelet acts as a client and the CRI shim as the server.
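In practice, "over Unix sockets" means that each runtime listens on a socket file on the node and the kubelet is told which endpoint to dial. Here is a small sketch that checks which runtime sockets are present. The paths in `DEFAULT_CRI_SOCKETS` are commonly used defaults, not guarantees: the actual location depends on how the node was installed. The function name `find_cri_endpoints` is an assumption made for this example.

```python
import os

# Commonly used default socket paths (assumptions; check your node's setup).
DEFAULT_CRI_SOCKETS = {
    "containerd": "/run/containerd/containerd.sock",
    "cri-o": "/var/run/crio/crio.sock",
    "dockershim": "/var/run/dockershim.sock",
}


def find_cri_endpoints(sockets=DEFAULT_CRI_SOCKETS, exists=os.path.exists):
    """Return {runtime name: unix:// endpoint} for every socket found.

    `exists` is injectable so the function can be tried without a real node.
    """
    return {
        name: "unix://" + path
        for name, path in sockets.items()
        if exists(path)
    }


# Pretend only the containerd socket is present on this node.
found = find_cri_endpoints(exists=lambda p: p.endswith("containerd.sock"))
print(found)
```

The resulting `unix://...` string has the form expected by the kubelet's `--container-runtime-endpoint` option and by crictl's `--runtime-endpoint` option.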

The protocol buffers API includes two gRPC services, ImageService, and RuntimeService. The ImageService provides RPCs to pull an image from a repository, inspect, and remove an image. The RuntimeService contains RPCs to manage the lifecycle of the pods and containers, as well as calls to interact with containers (exec/attach/port-forward). A monolithic container runtime that manages both images and containers (e.g., Docker and rkt) can provide both services simultaneously with a single socket.
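The split between the two services can be sketched as two interfaces. This is a toy, in-memory model and not the real API: the actual CRI is defined in protocol buffers and served over gRPC, with far richer request and response messages. The method names below echo some of the real RPC names (PullImage, RunPodSandbox, CreateContainer, StartContainer, …), but the signatures, the `FakeMonolithicRuntime` class and the image name are simplifications invented for this example.

```python
from abc import ABC, abstractmethod
from itertools import count


class ImageService(ABC):
    """Simplified stand-in for the CRI ImageService."""

    @abstractmethod
    def pull_image(self, image): ...

    @abstractmethod
    def list_images(self): ...

    @abstractmethod
    def remove_image(self, image): ...


class RuntimeService(ABC):
    """Simplified stand-in for the CRI RuntimeService."""

    @abstractmethod
    def run_pod_sandbox(self, name, namespace): ...

    @abstractmethod
    def create_container(self, sandbox_id, name, image): ...

    @abstractmethod
    def start_container(self, container_id): ...

    @abstractmethod
    def stop_container(self, container_id): ...

    @abstractmethod
    def list_containers(self): ...


class FakeMonolithicRuntime(ImageService, RuntimeService):
    """Hypothetical runtime serving both services from one object,
    the way a monolithic runtime serves both from a single socket."""

    def __init__(self):
        self._ids = count(1)
        self.images = set()
        self.sandboxes = {}
        self.containers = {}

    def pull_image(self, image):
        self.images.add(image)
        return image

    def list_images(self):
        return sorted(self.images)

    def remove_image(self, image):
        self.images.discard(image)

    def run_pod_sandbox(self, name, namespace):
        sandbox_id = f"sandbox-{next(self._ids)}"
        self.sandboxes[sandbox_id] = {"name": name, "namespace": namespace}
        return sandbox_id

    def create_container(self, sandbox_id, name, image):
        if sandbox_id not in self.sandboxes:
            raise KeyError(f"unknown sandbox {sandbox_id}")
        if image not in self.images:
            raise ValueError(f"image {image} has not been pulled")
        container_id = f"container-{next(self._ids)}"
        self.containers[container_id] = {
            "sandbox": sandbox_id, "name": name, "image": image, "state": "CREATED",
        }
        return container_id

    def start_container(self, container_id):
        self.containers[container_id]["state"] = "RUNNING"

    def stop_container(self, container_id):
        self.containers[container_id]["state"] = "EXITED"

    def list_containers(self):
        return [(cid, c["name"], c["state"]) for cid, c in self.containers.items()]


# The order of calls a kubelet-like client makes to start one container in a pod.
runtime = FakeMonolithicRuntime()
runtime.pull_image("nginx:1.25")
sandbox = runtime.run_pod_sandbox("web", "default")
container = runtime.create_container(sandbox, "nginx", "nginx:1.25")
runtime.start_container(container)
print(runtime.list_containers())
```

The point of the sketch is the ordering: the image is pulled through one service, then the pod sandbox is created before any container, and containers are created inside that sandbox before being started.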

image from https://kubernetes.io

To implement a CRI integration with Kubernetes for running containers, a container runtime environment must be compliant with the runtime specification of the Open Container Initiative (as described above).

Since Kubernetes 1.10, containerd 1.1 and above is production ready.

Several CRI implementations exist, including containerd and CRI-O, both discussed in this post.

A direct consequence of all this is that Docker is not a mandatory piece of Kubernetes.

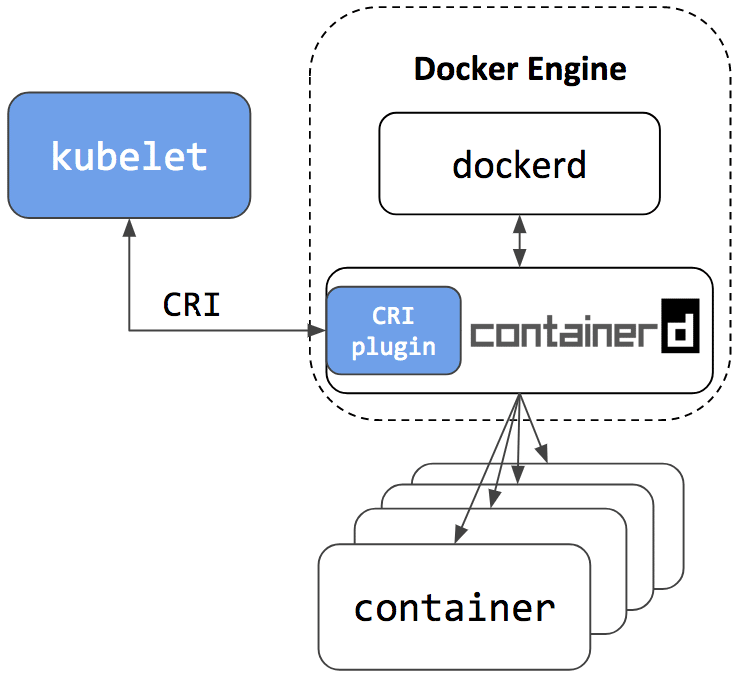

As previously mentioned, Docker now relies on containerd. This means users have the option to continue using Docker Engine for other purposes typical for Docker users, while also being able to configure Kubernetes to use the underlying containerd that came with, and is simultaneously being used by, Docker Engine on the same node:

image from https://kubernetes.io

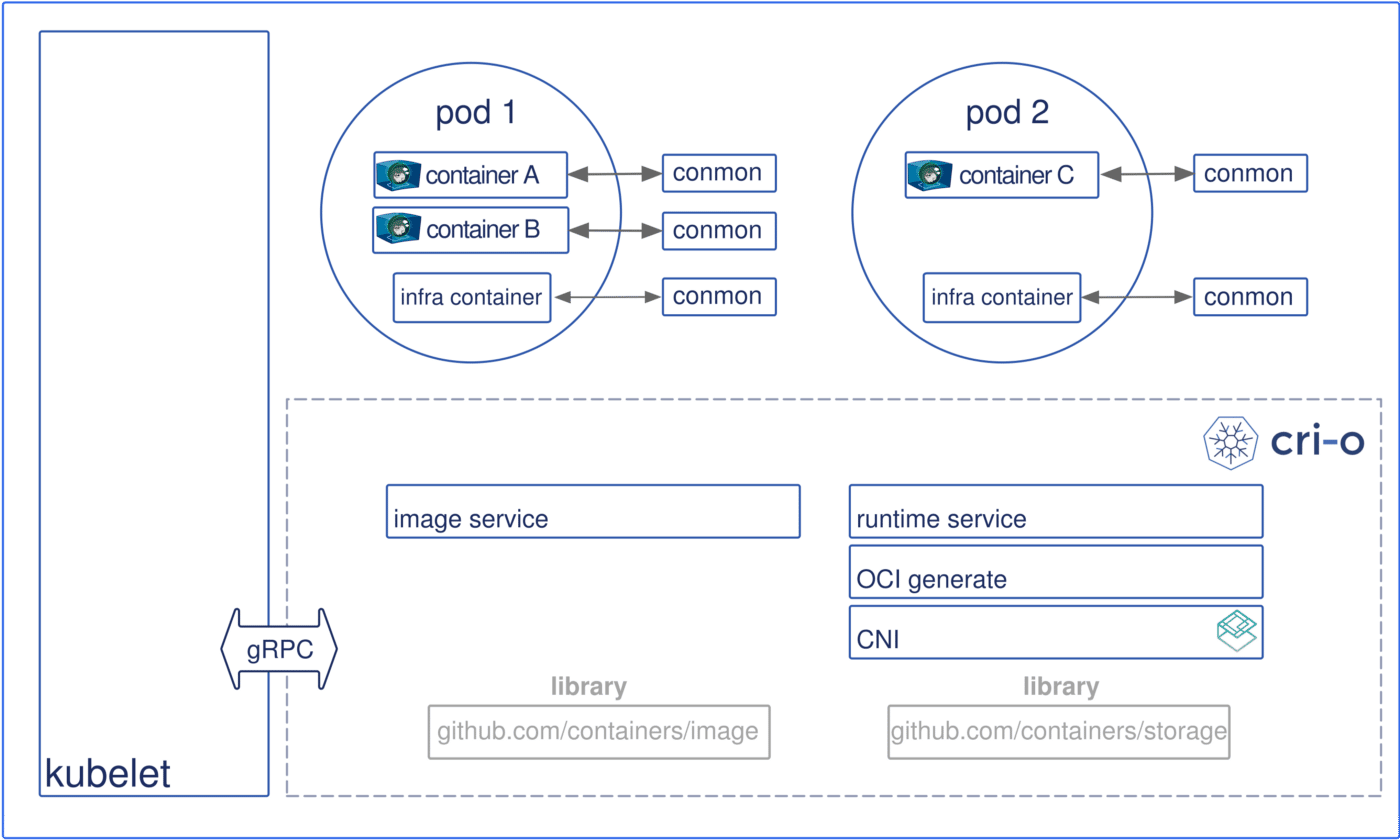

CRI-O is another high-level CRI runtime, made only for Kubernetes, that supports low-level runtimes like runc and Clear Containers (now part of Kata Containers).

The main contributors to CRI-O are Red Hat, IBM, Intel, SUSE and Hyper.

The overall architecture of CRI-O looks like this:

image from https://cri-o.io

So, without Docker, how do we interact from the command line with high-level Kubernetes container runtimes and images?

crictl is a tool that provides an experience similar to the Docker CLI for Kubernetes node troubleshooting, and it works consistently across all CRI-compatible container runtimes. Its goal is not to replace Docker or kubectl but to provide just enough commands for node troubleshooting, which makes it safer to use on production nodes. It also offers a more Kubernetes-friendly view of containers, using the notions of pod and namespace. It is used internally by the Kubernetes project to test and validate the kubelet CRI.
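The pod and namespace view comes largely from labels that the kubelet attaches to each container, which crictl can print as JSON with `crictl ps -o json`. Below is a hedged sketch that groups containers by namespace and pod. The `SAMPLE` document is hand-written to resemble that output (IDs and names are made up, and real output has more fields), and `containers_by_pod` is a hypothetical helper.

```python
import json

# Illustrative sample shaped like `crictl ps -o json` output; values are made up.
SAMPLE = """
{
  "containers": [
    {
      "id": "1f73f2d81bf98",
      "metadata": {"name": "nginx"},
      "state": "CONTAINER_RUNNING",
      "labels": {
        "io.kubernetes.pod.name": "web-0",
        "io.kubernetes.pod.namespace": "default"
      }
    },
    {
      "id": "9c5951df22c78",
      "metadata": {"name": "sidecar"},
      "state": "CONTAINER_RUNNING",
      "labels": {
        "io.kubernetes.pod.name": "web-0",
        "io.kubernetes.pod.namespace": "default"
      }
    },
    {
      "id": "87d3992f84f74",
      "metadata": {"name": "coredns"},
      "state": "CONTAINER_RUNNING",
      "labels": {
        "io.kubernetes.pod.name": "coredns-abc",
        "io.kubernetes.pod.namespace": "kube-system"
      }
    }
  ]
}
"""


def containers_by_pod(crictl_json):
    """Group (container name, state) pairs by (namespace, pod name)."""
    grouped = {}
    for c in json.loads(crictl_json).get("containers", []):
        labels = c.get("labels") or {}
        key = (
            labels.get("io.kubernetes.pod.namespace", "?"),
            labels.get("io.kubernetes.pod.name", "?"),
        )
        grouped.setdefault(key, []).append((c["metadata"]["name"], c["state"]))
    return grouped


pods = containers_by_pod(SAMPLE)
for (namespace, pod), members in sorted(pods.items()):
    print(namespace, pod, members)
```

On a real node, the input could come from `subprocess.run(["crictl", "ps", "-o", "json"], capture_output=True, text=True, check=True).stdout` instead of the sample string.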

So, for instance, you can do:

crictl ps

crictl exec -i -t mycontainerid sh

Other useful tools that don’t rely on Docker:

We already talked about runc as probably the most popular low-level container runtime, but there are also some pretty interesting projects (part of the CNCF landscape in the container runtime section):

Kata Containers provides stronger isolation (like VMs) while still plugging easily into all the tooling we have around containers. This means you can spin up these VMs (or, we could say, Kata containers) through Kubernetes.

"Kata Containers is an open source community working to build a secure container runtime with lightweight virtual machines that feel and perform like containers, but provide stronger workload isolation using hardware virtualization technology as a second layer of defense."

In the same area there is gVisor from Google that creates a specialized guest kernel for running containers. It limits the host kernel surface accessible to the application.

"gVisor is a user-space kernel, written in Go, that implements a substantial portion of the Linux system surface. It includes an Open Container Initiative (OCI) runtime called runsc that provides an isolation boundary between the application and the host kernel. The runsc runtime integrates with Docker and Kubernetes, making it simple to run sandboxed containers."

This post was a bit theoretical and represents my understanding of the Kubernetes container runtime jungle. I think it explains the current situation and the progress made, and gives a glimpse of the future.

Many changes have appeared since Docker was born. Standardisation is a natural evolution as features and actors grow.

A better architecture took place in the Kubernetes container runtime world through CRI with separation of concerns and tools dedicated to specific tasks.

Kata Containers, gVisor and other virtualisation systems inside Kubernetes are young but very promising technologies.

The use of these new virtualisation technologies within Kubernetes, combined with VMware’s announcement of Project Pacific (putting K8s at the heart of the vSphere platform), shows (from my point of view) the future convergence of containers with virtual machines.