By Romain Boulanger.

When it comes to deployment in Kubernetes, it’s hard not to mention GitOps. Indeed, this practice is becoming the standard and the most widely used approach.

To introduce this concept very quickly, Git will contain our deployment files (Helm charts, Kustomize files, YAML files, etc.) and the contents of this code repository will be synchronized thanks to a tool installed beforehand in the cluster. As you can see, Git will become the source of truth for the desired state of our objects.

Another advantage of Git is traceability: every change is recorded as a commit in the source manager, making it possible to validate new components or updates through Merge Requests or Pull Requests.


Although similar in their GitOps approach, Argo CD and Flux CD, the two leading GitOps tools for Kubernetes, do have a few differences.

When it comes to synchronization, Argo CD’s default operation is manual, requiring a user action to trigger it, whereas Flux CD is fully automatic by default.

As a general guideline, we will use a Merge/Pull requests system, synchronising only the main branch.

For reconciliation in the event of drift, Argo CD offers optional settings, whereas Flux CD runs a fully automatic reconciliation loop with no parameters to set, particularly for Kustomize.
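To make the Flux CD side more concrete, here is a minimal sketch of a Git source and a Kustomization that reconciles it from the main branch. The repository URL and the object names are placeholders, not taken from a real setup, and the intervals are arbitrary. The snippet only writes a local file; nothing is applied to a cluster.

```shell
# Sketch only: demo-repo and the example.com URL are hypothetical placeholders.
cat > flux-sync.yaml <<'EOF'
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: demo-repo
  namespace: flux-system
spec:
  interval: 1m            # how often Flux checks Git for new commits
  url: https://example.com/demo/deployments.git
  ref:
    branch: main          # only the main branch is synchronised
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: demo-repo
  namespace: flux-system
spec:
  interval: 10m           # the reconciliation loop re-applies the desired state at this pace
  sourceRef:
    kind: GitRepository
    name: demo-repo
  path: ./
  prune: true             # delete objects that were removed from Git
EOF

echo "wrote flux-sync.yaml (apply it with: kubectl apply -f flux-sync.yaml)"
```

Once applied, drift is corrected on each interval without any user action, which is the behaviour described above.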

More conveniently, it is possible to select the resources to be synchronized with Argo CD, while Flux CD will take the entire contents of the code repository.

When a rollback is made, the behavior is different, especially on Helm charts. Thanks to its controllers, Flux CD can go back to an earlier version of a chart, whereas Argo CD will use a commit number to go back to an earlier version, because it applies the output of the helm template command.

Last but not least: permission management. Flux CD uses Kubernetes’ native role-based access control (RBAC) layer, whereas Argo CD integrates its own system, in the form of a ConfigMap that defines groups, roles and permissions.
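As an illustration of the Argo CD side, the sketch below writes an argocd-rbac-cm ConfigMap to a local file. The deployer role and the platform-team group are hypothetical names chosen for the example; only the ConfigMap name and the policy line format come from Argo CD itself.

```shell
# Sketch only: role:deployer and platform-team are made-up names for illustration.
cat > argocd-rbac-cm.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: argocd-rbac-cm
  namespace: argocd
data:
  # Users without an explicit role fall back to read-only access
  policy.default: role:readonly
  # p lines grant a permission: role, resource, action, project/application, effect
  # g lines map a group (or user) to a role
  policy.csv: |
    p, role:deployer, applications, get, default/*, allow
    p, role:deployer, applications, sync, default/*, allow
    g, platform-team, role:deployer
EOF

echo "wrote argocd-rbac-cm.yaml"
```

With Flux CD, the equivalent restriction would be expressed with standard Kubernetes Role and RoleBinding objects instead.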

We’ll look at the main advantages of the two tools later, but the idea is to compare several key points.

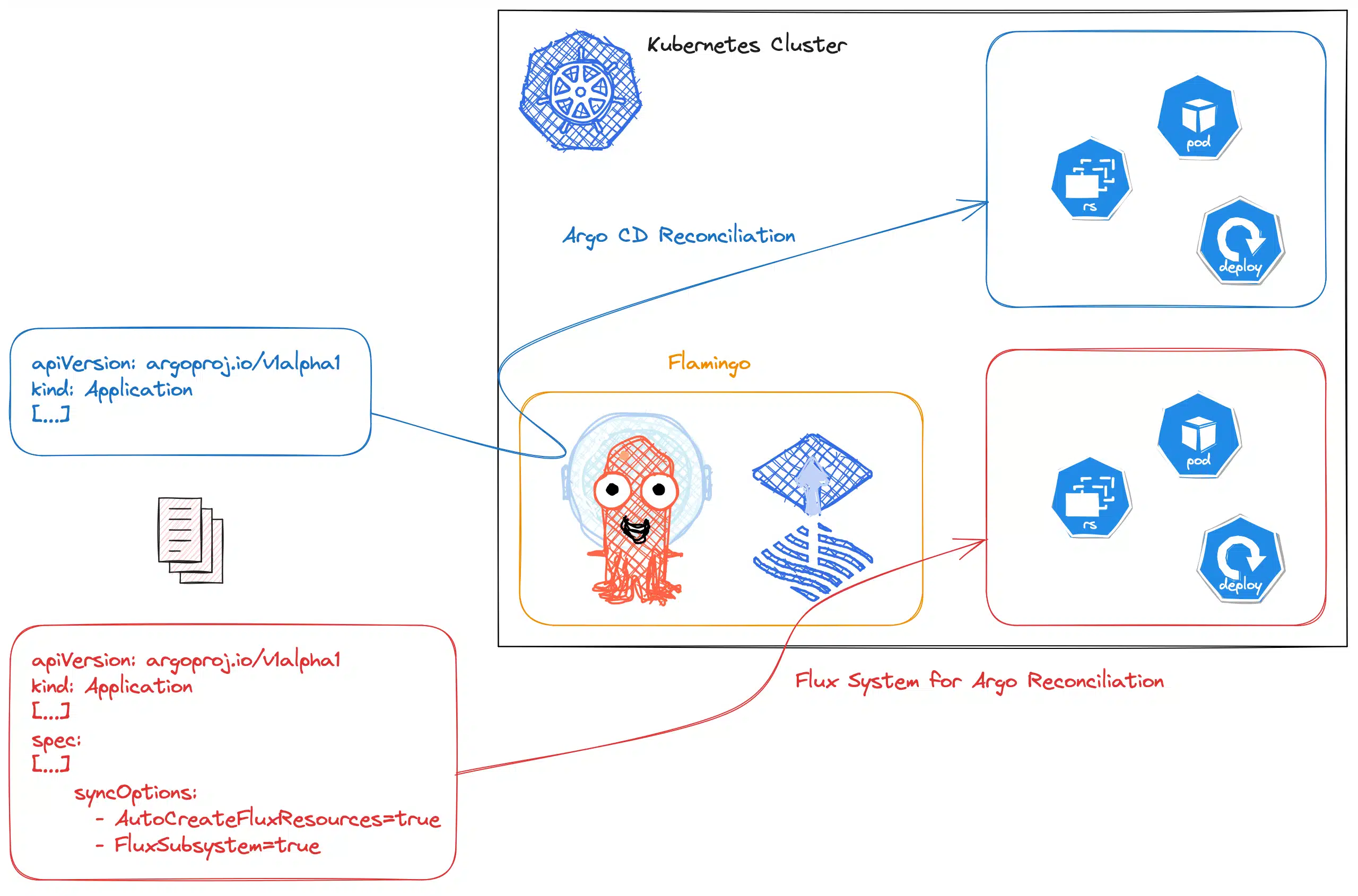

Why choose when you can have both? That’s what Flamingo offers, sponsored by Weaveworks, while remaining totally open source.

Flamingo builds on the strengths of Argo CD:

A streamlined User Interface for visualizing deployed resources. This can be seen as a control tower for all deployments;

Custom Resource Definitions (CRDs) such as AppProject, Application and ApplicationSet, which enable us to isolate and deploy our resources with a wide range of settings and options.

It combines them with the performance of the Flux CD reconciliation engine, based on controllers, whether for Helm, Kustomize or even Terraform, with the tf-controller for deploying Infrastructure as Code on AWS, Azure, Google Cloud or other platforms.

To put it simply, Flamingo is a modified Argo CD that can take advantage of the Flux Subsystem for Argo (FSA), enabling the Flux CD reconciliation loop to be used instead of the Argo CD reconciliation loop. However, this choice is completely configurable.

Nevertheless, it is perfectly possible to use both methods (Argo CD or FSA) within the same instance for different deployments: compatibility is fully managed and maintained.

To use the Flux Subsystem for Argo, you need to configure two synchronisation options in your Application or ApplicationSet:

    syncOptions:
    - AutoCreateFluxResources=true
    - FluxSubsystem=true

To conclude this section, here is a summary of the possibilities offered by Flamingo:

Now it’s time to discover Flamingo!

To install Flamingo and try it yourself, you will need a Kubernetes cluster and the flux and flamingo command-line tools.

Note that Flamingo can use an existing Argo CD installation as explained in this guide.

This is what I recommend for a permanent installation using the Argo CD Helm chart. The chart’s images will need to be overridden with the Flamingo images for all components (argocd-server, argocd-repo-server, etc.) as there is currently no official chart.
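A minimal sketch of such an override is shown below. It assumes the community argo-cd chart from the argo-helm repository and its global.image values, and it reuses the Flamingo image tag that appears later in this article. The chart version you pick must ship a matching Argo CD version, which is not checked here.

```shell
# Sketch only: assumes the argo-cd chart exposes global.image.repository and global.image.tag.
cat > values-flamingo.yaml <<'EOF'
global:
  image:
    # Flamingo build of Argo CD, used by every component of the chart
    repository: ghcr.io/flux-subsystem-argo/fsa/argocd
    tag: v2.8.6-fl.21-main-ff4f071a
EOF

echo "wrote values-flamingo.yaml"

# To install with these values (not executed here):
#   helm repo add argo https://argoproj.github.io/argo-helm
#   helm upgrade --install argocd argo/argo-cd \
#     --namespace argocd --create-namespace -f values-flamingo.yaml
```

Flux CD still has to be installed in the cluster separately for the Flux reconciliation loop to be available.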

Personally, I use a kind cluster with two nodes (controlplane and worker) using the following configuration:

    cat > kind-flamingo.yaml <<EOF
    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    nodes:
    - role: control-plane
    - role: worker
    EOF
    kind create cluster --config kind-flamingo.yaml --name flamingo

The first step is to install Flux CD inside the cluster:

    flux install

Then Flamingo:

    flamingo install --version=v2.8.6

Once installed, the argocd and flux-system namespaces are created:

    $ kubectl get ns
    NAME          STATUS   AGE
    argocd        Active   90s
    default       Active   3m49s
    flux-system   Active   2m35s
    [...]

Interestingly, within the argocd namespace, the images of the main Argo CD components are replaced by those of Flamingo:

$ kubectl -n argocd get deployments argocd-server argocd-applicationset-controller argocd-notifications-controller argocd-repo-server -o yaml | grep image:

image: ghcr.io/flux-subsystem-argo/fsa/argocd:v2.8.6-fl.21-main-ff4f071a

image: ghcr.io/flux-subsystem-argo/fsa/argocd:v2.8.6-fl.21-main-ff4f071a

image: ghcr.io/flux-subsystem-argo/fsa/argocd:v2.8.6-fl.21-main-ff4f071a

image: ghcr.io/flux-subsystem-argo/fsa/argocd:v2.8.6-fl.21-main-ff4f071a

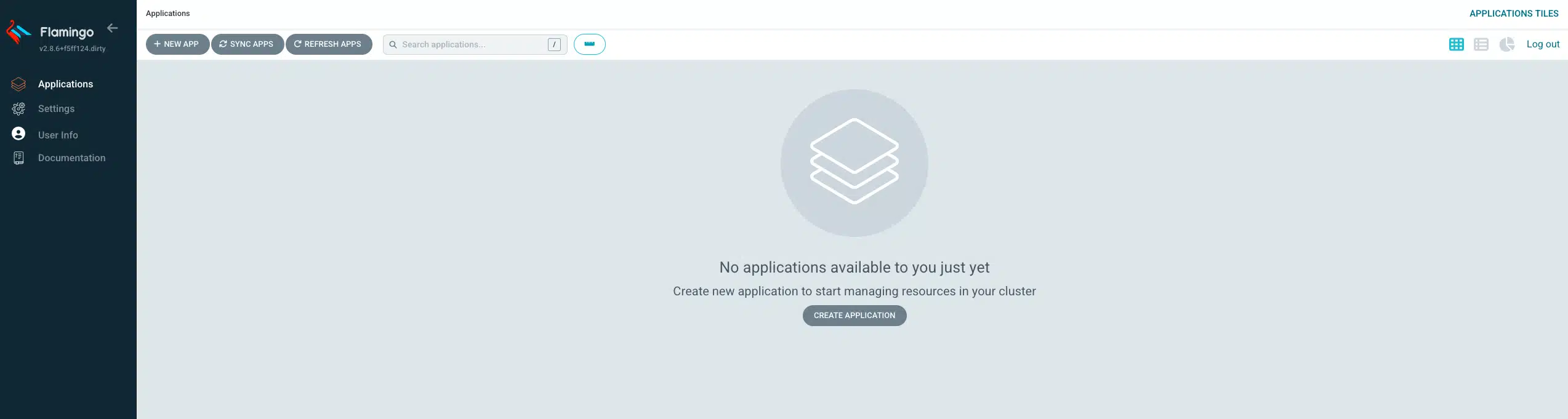

image: ghcr.io/flux-subsystem-argo/fsa/argocd:v2.8.6-fl.21-main-ff4f071aLet’s take a look at the GUI, but first we need to retrieve the password set during installation.

Flamingo provides a built-in command to get the password associated with the admin user:

    flamingo show-init-password

You can use the port-forward command to access the user interface without needing to configure a NodePort service or an Ingress:

    kubectl port-forward -n argocd svc/argocd-server 8080:443

The interface is identical to that of Argo CD, except for the logo, which has been changed.

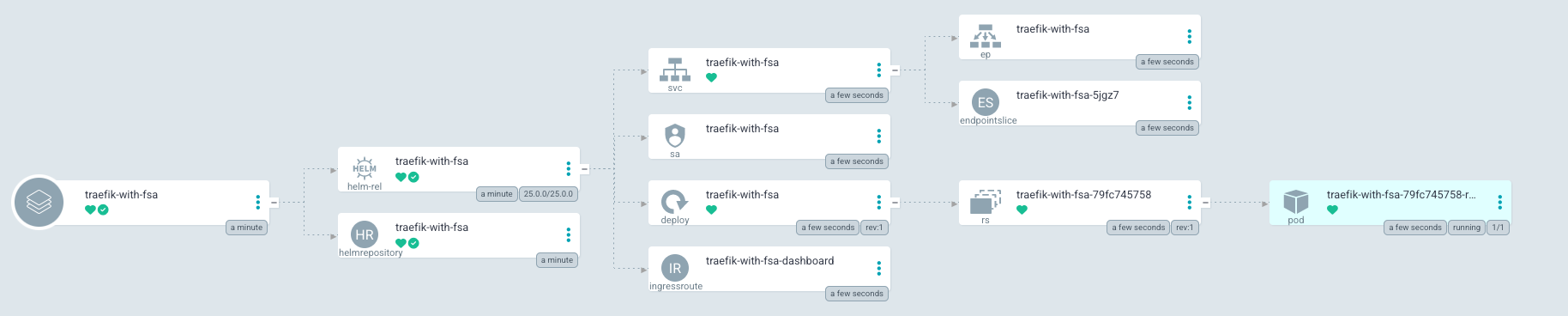

Once the installation is complete, the aim is to test two deployments:

A standard Application that installs a Helm chart;

The same Application with the AutoCreateFluxResources=true and FluxSubsystem=true options, to use the Flux Subsystem for Argo (FSA).

For this example, I chose the Traefik Helm chart, since Traefik is my favourite Ingress Controller! Here are my two deployments in Application format:

    cat > traefik-without-fsa.yaml <<EOF
    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: traefik-without-fsa
      namespace: argocd
    spec:
      project: default
      source:
        chart: traefik
        repoURL: https://traefik.github.io/charts
        targetRevision: 25.0.0
        helm:
          releaseName: traefik
          values: |
            service:
              type: NodePort
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
        - CreateNamespace=true
      destination:
        namespace: traefik-without-fsa
        server: 'https://kubernetes.default.svc'
    EOF

There’s nothing very complicated here: specify the chart, its version and the URL to retrieve it from, and target the local cluster for the installation. The values section avoids the LoadBalancer service type, because it cannot be provided natively by kind.

On the syncPolicy side, automatic mode for synchronisation has been configured with remediation (selfHeal) and deletion of old resources (prune).

    cat > traefik-with-fsa.yaml <<EOF
    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: traefik-with-fsa
      namespace: argocd
    spec:
      project: default
      source:
        chart: traefik
        repoURL: https://traefik.github.io/charts
        targetRevision: 25.0.0
        helm:
          releaseName: traefik
          values: |
            service:
              type: NodePort
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
        - CreateNamespace=true
        - AutoCreateFluxResources=true
        - FluxSubsystem=true
      destination:
        namespace: traefik-with-fsa
        server: 'https://kubernetes.default.svc'
    EOF

No major difference beyond our two configured options.

Now it’s time to apply our two files within the cluster with the command kubectl create -f:

    kubectl create -f traefik-without-fsa.yaml
    kubectl create -f traefik-with-fsa.yaml

Comparing the traefik-without-fsa Application with traefik-with-fsa, we can tell that two additional objects are created, HelmRelease and HelmRepository, which are the objects handled by the Flux CD controllers.

    $ kubectl -n traefik-with-fsa get helmrepositories.source.toolkit.fluxcd.io
    NAME               URL                                AGE     READY   STATUS
    traefik-with-fsa   https://traefik.github.io/charts   4m19s   True    stored artifact: revision 'sha256:fc9276fb5f5dbcbf524bdd84ce67771c128fdfeb598208e3b6445062e4d79d37'

    $ kubectl -n traefik-with-fsa get helmreleases.helm.toolkit.fluxcd.io
    NAME               AGE     READY   STATUS
    traefik-with-fsa   4m37s   True    Release reconciliation succeeded

So it is indeed the FSA reconciliation loop that created and reconciled these Kubernetes objects when the second Application was created.
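To look a little closer at what FSA generated, the following sketch wraps a few read-only commands in a helper. The inspect_fsa function name is mine, the helm CLI is an extra assumption not required elsewhere in this article, and the commands only run if a cluster is reachable.

```shell
# Sketch only: read-only inspection of the objects generated for traefik-with-fsa.
inspect_fsa() {
  ns="traefik-with-fsa"

  # Flux objects generated from the Application
  kubectl -n "$ns" get helmreleases.helm.toolkit.fluxcd.io,helmrepositories.source.toolkit.fluxcd.io

  # Chart version and values handed over to the Flux helm-controller
  kubectl -n "$ns" get helmreleases.helm.toolkit.fluxcd.io traefik-with-fsa \
    -o jsonpath='{.spec.chart.spec.version}{"\n"}{.spec.values}{"\n"}'

  # Helm releases known to Helm itself, across all namespaces
  helm list --all-namespaces
}

# Only run when a cluster is actually reachable
if command -v kubectl >/dev/null 2>&1 && kubectl cluster-info --request-timeout=5s >/dev/null 2>&1; then
  inspect_fsa
else
  echo "no reachable cluster, skipping inspection"
fi
```

Since Argo CD renders charts with helm template while Flux CD performs a real Helm installation, I would expect helm list to show a release only for the FSA deployment, but treat that as something to verify on your own cluster.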

Because Flamingo is still at an early stage, some parameters are not yet supported, such as the valuesObject field, which doesn’t pass overridden values to the Flux CD controller, so values should be used as in the example above.
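For readers unfamiliar with the two fields, the sketch below writes both forms of the helm section side by side into a local file. The content mirrors the Traefik example; the file name is arbitrary and the fragments are not complete Application manifests.

```shell
# Sketch only: two fragments of an Application's spec.source.helm section.
cat > helm-values-forms.yaml <<'EOF'
# Form 1: values as a multi-line string (the form used in this article)
helm:
  releaseName: traefik
  values: |
    service:
      type: NodePort
---
# Form 2: valuesObject as a structured map (not yet passed on to Flux CD)
helm:
  releaseName: traefik
  valuesObject:
    service:
      type: NodePort
EOF

echo "wrote helm-values-forms.yaml"
```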

Finally, if the traefik-with-fsa Application is deleted, objects handled by Flux CD are not deleted automatically. This behaviour is totally normal for the moment: the configuration is translated into Flux CD objects, and it is Flux CD that handles their lifecycle independently of the Application lifecycle.
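If you want to clean up after deleting the Application, a sketch along these lines removes the leftover Flux CD objects by hand. The cleanup_fsa function name is mine, and the object names are the ones shown in the kubectl output earlier. Deleting the HelmRelease first lets the Flux helm-controller uninstall the release before its source disappears.

```shell
# Sketch only: manual cleanup of the Flux objects left behind by traefik-with-fsa.
cleanup_fsa() {
  ns="traefik-with-fsa"

  # Removing the HelmRelease makes Flux uninstall the Helm release it manages
  kubectl -n "$ns" delete helmreleases.helm.toolkit.fluxcd.io traefik-with-fsa --ignore-not-found

  # Then remove the chart source
  kubectl -n "$ns" delete helmrepositories.source.toolkit.fluxcd.io traefik-with-fsa --ignore-not-found
}

# Only run when a cluster is actually reachable
if command -v kubectl >/dev/null 2>&1 && kubectl cluster-info --request-timeout=5s >/dev/null 2>&1; then
  cleanup_fsa
else
  echo "no reachable cluster, skipping cleanup"
fi
```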

Flamingo appears to be a tool that bridges the gap between the two leading tools of the GitOps world in Kubernetes.

By combining the strengths of both, it lets Argo CD enthusiasts stay on familiar ground and keep the wide range of deployment possibilities offered by the Application and ApplicationSet objects.

For Flux CD users who want to keep dedicated controllers such as the Terraform one, along with the tool’s own reconciliation loop, this is an opportunity to get a centralised view of the deployments carried out on the cluster.

However, Flamingo is still at a fairly early stage and needs to be tested before it can be used in production contexts.
